Build reliable web scrapers. Fast.

Crawlee is a web scraping library for JavaScript and Python. It handles blocking, crawling, proxies, and browsers for you.

```python
import asyncio

from crawlee.crawlers import PlaywrightCrawler, PlaywrightCrawlingContext


async def main() -> None:
    crawler = PlaywrightCrawler(
        max_requests_per_crawl=10,  # Limit the max requests per crawl.
        headless=True,  # Run in headless mode (set to False to see the browser).
        browser_type='firefox',  # Use Firefox browser.
    )

    # Define the default request handler, which will be called for every request.
    @crawler.router.default_handler
    async def request_handler(context: PlaywrightCrawlingContext) -> None:
        context.log.info(f'Processing {context.request.url} ...')

        # Extract data from the page using Playwright API.
        data = {
            'url': context.request.url,
            'title': await context.page.title(),
        }

        # Push the extracted data to the default dataset.
        await context.push_data(data)

        # Extract all links on the page and enqueue them.
        await context.enqueue_links()

    # Run the crawler with the initial list of URLs.
    await crawler.run(['https://crawlee.dev'])

    # Export the entire dataset to a CSV file.
    await crawler.export_data('results.csv')

    # Or access the data directly.
    data = await crawler.get_data()
    crawler.log.info(f'Extracted data: {data.items}')


if __name__ == '__main__':
    asyncio.run(main())
```

```shell
uvx 'crawlee[cli]' create my-crawler
```

What are the benefits?

Unblock websites by default

Crawlee crawls stealthily with zero configuration, and you can customize its behavior to get past stricter anti-bot protections. Realistic browser fingerprints are included.

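```python
from crawlee.crawlers import PlaywrightCrawler
from crawlee.fingerprint_suite import DefaultFingerprintGenerator, HeaderGeneratorOptions

# Generate fingerprints that mimic mobile Chromium or Firefox browsers with a US English locale.
fingerprint_generator = DefaultFingerprintGenerator(
    header_options=HeaderGeneratorOptions(
        browsers=['chromium', 'firefox'],
        devices=['mobile'],
        locales=['en-US'],
    ),
)

# Pass the generator to a browser-based crawler.
crawler = PlaywrightCrawler(fingerprint_generator=fingerprint_generator)
```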

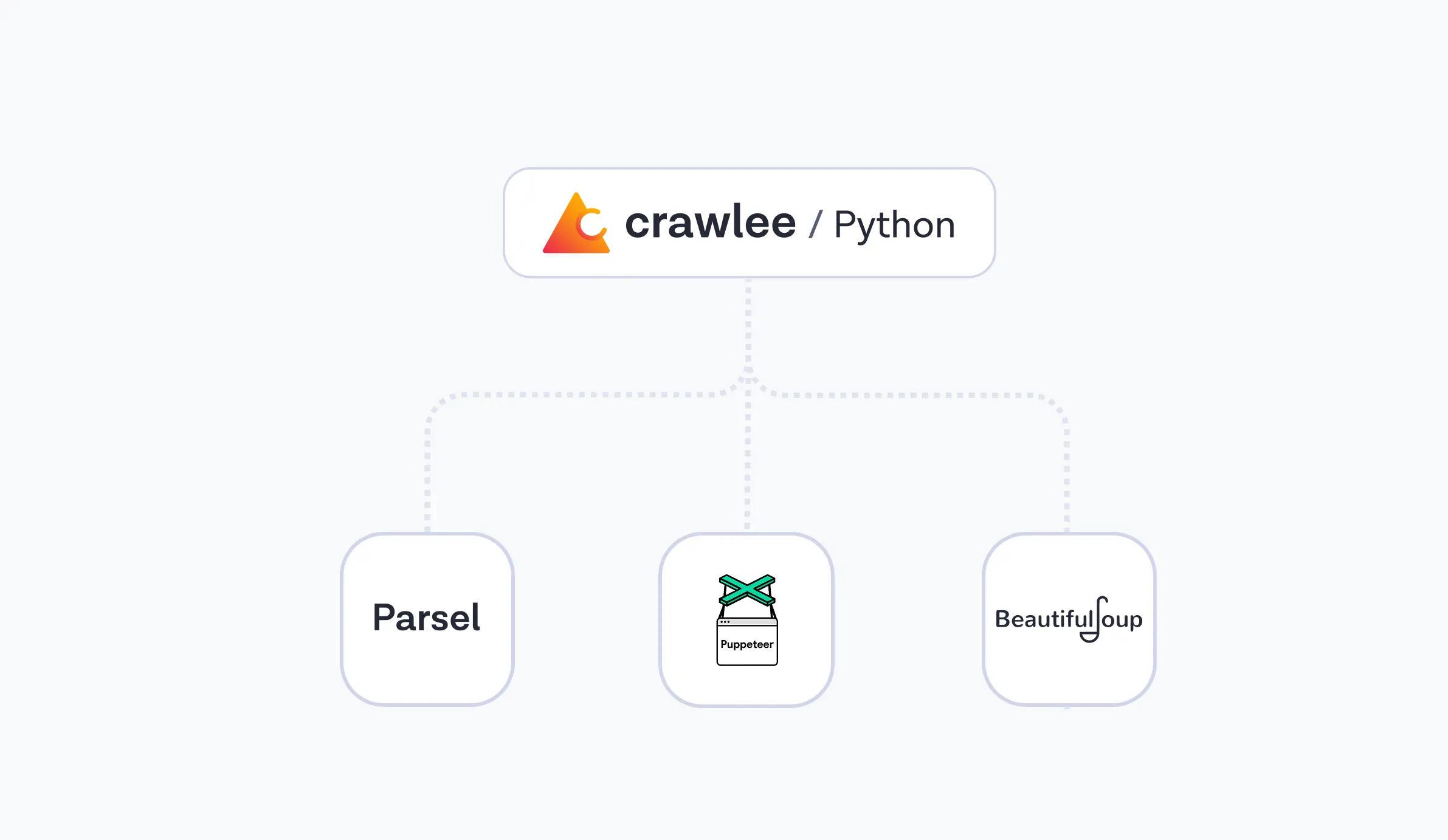

Work with your favorite tools

Crawlee integrates with BeautifulSoup, Cheerio, Puppeteer, Playwright, and other popular open-source tools. No need to learn new syntax.


One API for headless and HTTP

Switch between plain HTTP and headless-browser crawling without major rewrites, thanks to a shared API. Or let the adaptive crawler decide whether JavaScript rendering is needed.

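```python
from crawlee.crawlers import AdaptivePlaywrightCrawler, AdaptivePlaywrightCrawlingContext

# The adaptive crawler decides per request whether a static (Parsel) parser over plain HTTP
# is enough or whether the page must be rendered in a real browser.
crawler = AdaptivePlaywrightCrawler.with_parsel_static_parser()


@crawler.router.default_handler
async def request_handler(context: AdaptivePlaywrightCrawlingContext) -> None:
    # The same calls are used regardless of which mode handled the page.
    prices = await context.query_selector_all('span.price')
    await context.enqueue_links()
```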

What else is in Crawlee?

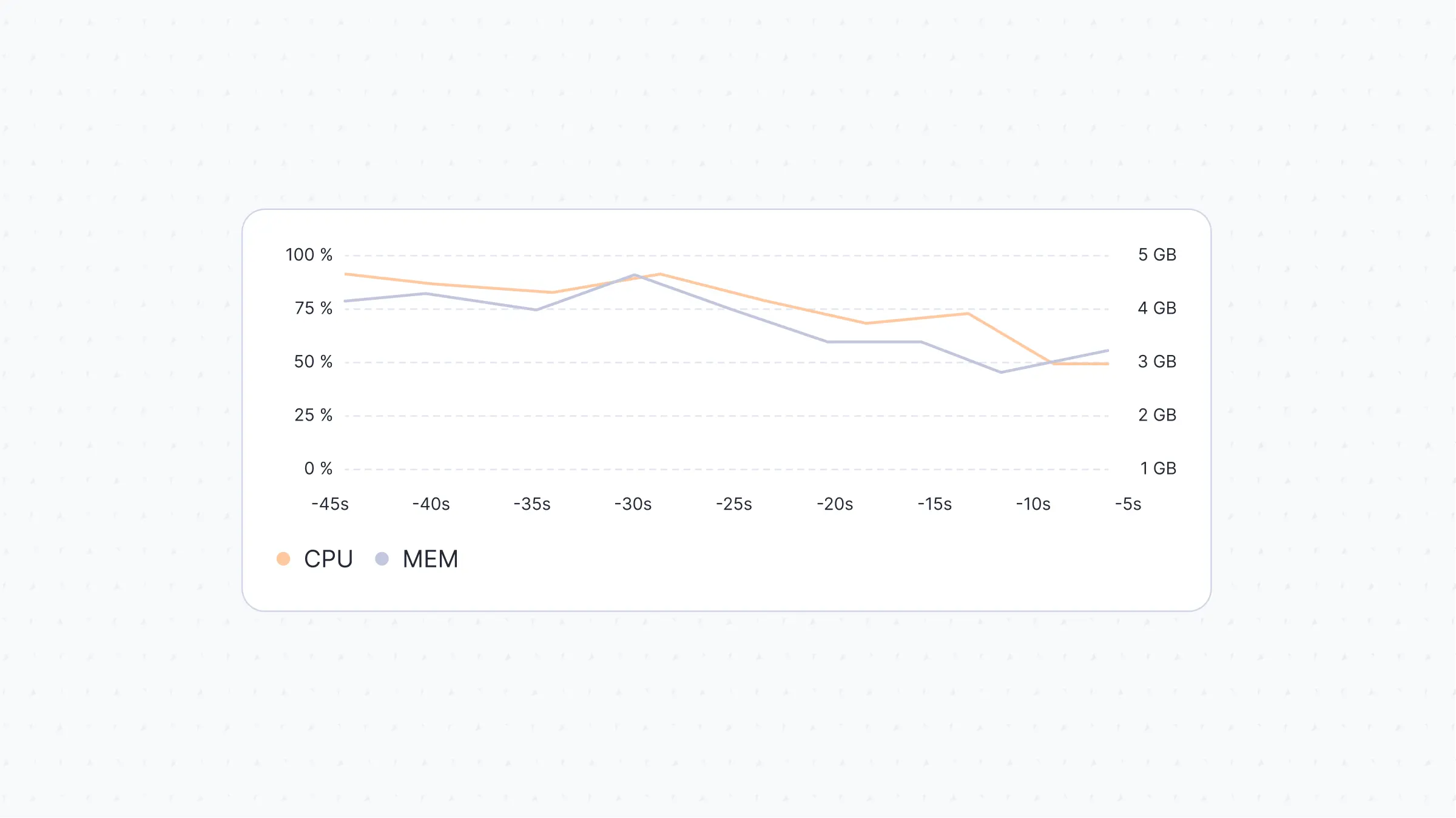

Auto scaling

Smart proxy rotation

Queue and storage

Pause and resume crawlers thanks to a persistent queue of URLs and storage for structured data.
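To illustrate why a persistent queue makes pausing and resuming possible, here is a minimal stdlib-only sketch. It is not Crawlee's request queue: the FileBackedQueue class and its methods are hypothetical, and it assumes a single process writing to a local JSON file. A URL leaves the pending list only after it is marked as handled, so a run that stops mid-request simply retries that URL when it resumes.

```python
from __future__ import annotations

import json
import os
import tempfile
from collections import deque


class FileBackedQueue:
    """Toy URL queue persisted to a JSON file (hypothetical, not Crawlee's request queue)."""

    def __init__(self, path: str) -> None:
        self.path = path
        self.pending: deque[str] = deque()
        self.handled: set[str] = set()
        # Restore whatever a previous, possibly interrupted, run left behind.
        if os.path.exists(path):
            with open(path, encoding='utf-8') as f:
                state = json.load(f)
            self.pending = deque(state['pending'])
            self.handled = set(state['handled'])

    def _save(self) -> None:
        # Persist after every change so the file always reflects the latest queue state.
        with open(self.path, 'w', encoding='utf-8') as f:
            json.dump({'pending': list(self.pending), 'handled': sorted(self.handled)}, f)

    def add(self, url: str) -> bool:
        # Deduplicate: a URL that is already pending or handled is not enqueued again.
        if url in self.handled or url in self.pending:
            return False
        self.pending.append(url)
        self._save()
        return True

    def next_url(self) -> str | None:
        # Peek only; the URL stays pending until it is explicitly marked as handled.
        return self.pending[0] if self.pending else None

    def mark_handled(self, url: str) -> None:
        self.pending.remove(url)
        self.handled.add(url)
        self._save()


path = os.path.join(tempfile.mkdtemp(), 'queue.json')
queue = FileBackedQueue(path)
for url in ['https://example.com/a', 'https://example.com/b', 'https://example.com/a']:
    queue.add(url)  # the duplicate /a is ignored
queue.mark_handled(queue.next_url())  # handle /a, then stop the "crawl"

resumed = FileBackedQueue(path)  # a later run picks up where the first one stopped
print(resumed.next_url())  # https://example.com/b
```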

Handy scraping utils

Sitemaps, infinite scroll, contact extraction, large asset blocking, and many more utilities are included.
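Crawlee ships its own sitemap helpers. To give a rough idea of what the sitemap part involves, the sketch below uses only Python's xml.etree.ElementTree to pull page URLs and nested sitemap URLs out of a sitemap or sitemap index, following the sitemaps.org schema. The parse_sitemap function is hypothetical, not Crawlee's API, and fetching the XML is left out.

```python
import xml.etree.ElementTree as ET

# XML namespace defined by the sitemaps.org protocol.
SITEMAP_NS = {'sm': 'http://www.sitemaps.org/schemas/sitemap/0.9'}


def parse_sitemap(xml_text: str) -> tuple[list[str], list[str]]:
    """Return (page URLs, nested sitemap URLs) from a sitemap or a sitemap index."""
    root = ET.fromstring(xml_text)
    # A <urlset> lists pages in <url><loc>...</loc></url> entries.
    pages = [loc.text.strip() for loc in root.findall('sm:url/sm:loc', SITEMAP_NS) if loc.text]
    # A <sitemapindex> points to further sitemaps in <sitemap><loc>...</loc></sitemap> entries.
    nested = [loc.text.strip() for loc in root.findall('sm:sitemap/sm:loc', SITEMAP_NS) if loc.text]
    return pages, nested


sample = """<urlset xmlns="http://www.sitemaps.org/schemas/sitemap/0.9">
  <url><loc>https://example.com/</loc></url>
  <url><loc>https://example.com/blog</loc></url>
</urlset>"""

pages, nested = parse_sitemap(sample)
print(pages)  # ['https://example.com/', 'https://example.com/blog']
```

A sitemap index yields no pages but returns the nested sitemap URLs, which a crawler would fetch and parse in turn.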

Routing & middleware

Keep your code clean and organized while managing complex crawls with a built-in router that sends each request to the handler registered for its label.
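In Crawlee, a request enqueued with a label (for example through enqueue_links(label=...)) is dispatched to the handler registered for that label, while unlabeled requests go to the default handler, as in the first example on this page. The toy router below sketches that dispatch pattern in plain Python. MiniRouter, Request, and the handler names are hypothetical stand-ins, not Crawlee classes; the handlers are synchronous for brevity, and this sketch also sends unknown labels to the default handler.

```python
from __future__ import annotations

from dataclasses import dataclass
from typing import Callable, Optional


@dataclass
class Request:
    url: str
    label: Optional[str] = None  # selects which handler processes this request


Handler = Callable[[Request], str]


class MiniRouter:
    """Toy label-based router (hypothetical; not Crawlee's Router class)."""

    def __init__(self) -> None:
        self._handlers: dict[str, Handler] = {}
        self._default: Optional[Handler] = None

    def default_handler(self, fn: Handler) -> Handler:
        self._default = fn
        return fn

    def handler(self, label: str) -> Callable[[Handler], Handler]:
        def register(fn: Handler) -> Handler:
            self._handlers[label] = fn
            return fn

        return register

    def __call__(self, request: Request) -> str:
        # Use the handler registered for the label, else fall back to the default handler.
        fn = self._handlers.get(request.label, self._default)
        if fn is None:
            raise LookupError(f'No handler for label {request.label!r}')
        return fn(request)


router = MiniRouter()


@router.default_handler
def list_page(request: Request) -> str:
    return f'listing {request.url}'


@router.handler('DETAIL')
def detail_page(request: Request) -> str:
    return f'detail {request.url}'


print(router(Request('https://example.com/products')))  # listing https://example.com/products
print(router(Request('https://example.com/products/1', label='DETAIL')))  # detail https://example.com/products/1
```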

Deploy to cloud

Add Actor.init() to the beginning and Actor.exit() to the end of your code to run it as an Actor on the Apify platform.

Crawlee helps you build scrapers faster

Zero setup required

Copy a code example, install Crawlee, and go. No CLI required, no complex file structure, no boilerplate.

Reasonable defaults

Unblocking, proxy rotation, and other core features are turned on by default, but they remain fully configurable.

Helpful community

Join our Discord community of over 10k developers and get fast answers to your web scraping questions.
